Figure 1 - Simulated augmented reality medical image

Augmented Reality (AR) is a growing area in virtual reality research. The world environment around us provides a wealth of information that is difficult to duplicate in a computer. This is evidenced by the worlds used in virtual environments. Either these worlds are very simplistic, such as the environments created for immersive entertainment and games, or the system that can create a more realistic environment has a million dollar price tag, such as flight simulators. An augmented reality system generates a composite view for the user. It is a combination of the real scene viewed by the user and a virtual scene generated by the computer that augments the scene with additional information. The application domains described in Section 1.2 reveal that the augmentation can take on a number of different forms. In all those applications the augmented reality presented to the user enhances that person's performance in and perception of the world. The ultimate goal is to create a system such that the user cannot tell the difference between the real world and the virtual augmentation of it. To the user of this ultimate system it would appear that he is looking at a single real scene. Figure 1 shows a view that the user might see from an augmented reality system in the medical domain. It depicts the merging and correct registration of data from a pre-operative imaging study onto the patient's head. Providing this view to a surgeon in the operating theater would enhance their performance and possibly eliminate the need for any other calibration fixtures during the procedure.

1.1 Augmented Reality vs. Virtual Reality

Virtual reality is a technology that encompasses a broad spectrum of ideas. It defines an umbrella under which many researchers and companies express their work. The phrase was originated by Jaron Lanier, the founder of VPL Research, one of the original companies selling virtual reality systems. The term was defined as "a computer generated, interactive, three-dimensional environment in which a person is immersed" (Aukstakalnis and Blatner 1992). There are three key points in this definition. First, this virtual environment is a computer generated three-dimensional scene which requires high performance computer graphics to provide an adequate level of realism. The second point is that the virtual world is interactive. A user requires real-time response from the system to be able to interact with it in an effective manner. The last point is that the user is immersed in this virtual environment. One of the identifying marks of a virtual reality system is the head mounted display worn by users. These displays block out all the external world and present to the wearer a view that is under the complete control of the computer. The user is completely immersed in an artificial world and becomes divorced from the real environment. For this immersion to appear realistic the virtual reality system must accurately sense how the user is moving and determine what effect that will have on the scene being rendered in the head mounted display.

The discussion above highlights the similarities and differences between virtual reality and augmented reality systems. A very visible difference between these two types of systems is the immersiveness of the system. Virtual reality strives for a totally immersive environment. The visual, and in some systems aural and proprioceptive, senses are under control of the system. In contrast, an augmented reality system is augmenting the real world scene necessitating that the user maintains a sense of presence in that world. The virtual images are merged with the real view to create the augmented display. There must be a mechanism to combine the real and virtual that is not present in other virtual reality work. Developing the technology for merging the real and virtual image streams is an active research topic and is briefly described in Section 1.3.3.

The computer generated virtual objects must be accurately registered with the real world in all dimensions. Errors in this registration will prevent the user from seeing the real and virtual images as fused. The correct registration must also be maintained while the user moves about within the real environment. Discrepancies or changes in the apparent registration will range from distracting, which makes working with the augmented view more difficult, to physically disturbing for the user, which makes the system completely unusable. An immersive virtual reality system must maintain registration so that changes in the rendered scene match with the perceptions of the user. Any errors here are conflicts between the visual system and the kinesthetic or proprioceptive systems. The phenomenon of visual capture gives the vision system a stronger influence in our perception (Welch 1978). This will allow a user to accept or adjust to a visual stimulus, overriding the discrepancies with input from other sensory systems. In contrast, errors of misregistration in an augmented reality system are between two visual stimuli which we are trying to fuse to see as one scene. We are more sensitive to these errors (Azuma 1993; Azuma 1995).

Milgram (Milgram and Kishino 1994; Milgram, Takemura et al. 1994) describes a taxonomy that identifies how augmented reality and virtual reality work are related. He defines the Reality-Virtuality continuum shown as Figure 2.

Figure 2 - Milgram's Reality-Virtuality Continuum

The real world and a totally virtual environment are at the two ends of this continuum with the middle region called Mixed Reality. Augmented reality lies near the real world end of the line with the predominant perception being the real world augmented by computer generated data. Augmented virtuality is a term created by Milgram to identify systems which are mostly synthetic with some real world imagery added, such as texture mapping video onto virtual objects. This is a distinction that will fade as the technology improves and the virtual elements in the scene become less distinguishable from the real ones.

Milgram further defines a taxonomy for the Mixed Reality displays. The three axes he suggests for categorizing these systems are: Reproduction Fidelity, Extent of Presence Metaphor and Extent of World Knowledge. Reproduction Fidelity relates to the quality of the computer generated imagery ranging from simple wireframe approximations to complete photorealistic renderings. The real-time constraint on augmented reality systems forces them to be toward the low end on the Reproduction Fidelity spectrum. The current graphics hardware capabilities can not produce real-time photorealistic renderings of the virtual scene. Milgram also places augmented reality systems on the low end of the Extent of Presence Metaphor. This axis measures the level of immersion of the user within the displayed scene. This categorization is closely related to the display technology used by the system. There are several classes of displays used in augmented reality systems that are discussed in Section 1.3.3. Each of these gives a different sense of immersion in the display. In an augmented reality system, this can be misleading because with some display technologies part of the "display" is the user's direct view of the real world. Immersion in that display comes from simply having your eyes open. It is contrasted to systems where the merged view is presented to the user on a separate monitor for what is sometimes called a "Window on the World" view.

The third, and final, dimension that Milgram uses to categorize Mixed Reality displays is Extent of World Knowledge. Augmented reality does not simply mean the superimposition of a graphic object over a real world scene. This is technically an easy task. One difficulty in augmenting reality, as defined here, is the need to maintain accurate registration of the virtual objects with the real world image. As will be described in Section 1.3.5, this often requires detailed knowledge of the relationship between the frames of reference for the real world, the camera viewing it and the user. In some domains these relationships are well known which makes the task of augmenting reality easier or might lead the system designer to use a completely virtual environment. The contribution of this thesis will be to minimize the calibration and world knowledge necessary to create an augmented view of the real environment.

1.2 Augmented Reality Application Domains

Only recently have the capabilities of real-time video image processing, computer graphic systems and new display technologies converged to make possible the display of a virtual graphical image correctly registered with a view of the 3D environment surrounding the user. Researchers working with augmented reality systems have proposed them as solutions in many domains. The areas that have been discussed range from entertainment to military training. Many of the domains, such as medical (Rosen, Laub et al. 1996), are also proposed for traditional virtual reality systems. This section will highlight some of the proposed applications for augmented reality.

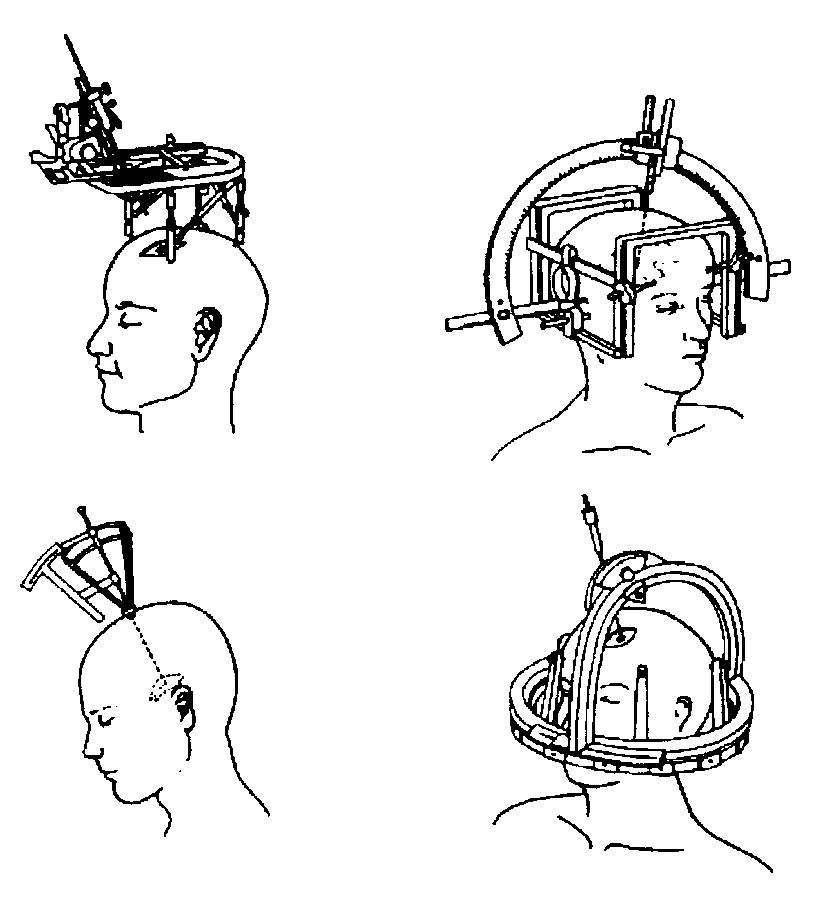

Because imaging technology is so pervasive throughout the medical field, it is not surprising that this domain is viewed as one of the more important for augmented reality systems. Most of the medical applications deal with image guided surgery. Pre-operative imaging studies, such as CT or MRI scans, of the patient provide the surgeon with the necessary view of the internal anatomy. From these images the surgery is planned. Visualization of the path through the anatomy to the affected area where, for example, a tumor must be removed is done by first creating a 3D model from the multiple views and slices in the preoperative study. This is most often done mentally though some systems will create 3D volume visualizations from the image study. Augmented reality can be applied so that the surgical team can see the CT or MRI data correctly registered on the patient in the operating theater while the procedure is progressing. Being able to accurately register the images at this point will enhance the performance of the surgical team and eliminate the need for the painful and cumbersome stereotactic frames shown in Figure 3 that are currently used for registration.

Figure 3 - Typical Stereotactic Frames from (Mellor 1995)

Other work in the area of image guided surgery using augmented reality can be found in (Lorensen, Cline et al. 1993; Grimson, Lozano-Perez et al. 1994; Betting, Feldmar et al. 1995; Grimson, Ettinger et al. 1995; Mellor 1995; Uenohara and Kanade 1995).

Another application for augmented reality in the medical domain is in ultrasound imaging (State, Chen et al. 1994). Using an optical see-through display the ultrasound technician can view a volumetric rendered image of the fetus overlaid on the abdomen of the pregnant woman. The image appears as if it were inside of the abdomen and is correctly rendered as the user moves. Information about this prototype system can be found in (Brooks 1995).

A simple form of augmented reality has been in use in the entertainment and news business for quite some time. Whenever you are watching the evening weather report the weather reporter is shown standing in front of changing weather maps. In the studio the reporter is actually standing in front of a blue or green screen. This real image is augmented with computer generated maps using a technique called chroma-keying. It is also possible to create a virtual studio environment so that the actors can appear to be positioned in a studio with computer generated decorating. Examples of using this technique can be found at (Schmidt 1996; Schmidt 1996b).
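The chroma-keying step mentioned above can be illustrated with a minimal sketch. It assumes RGB pixels stored as tuples in nested lists, a pure green key colour, and a simple distance threshold; the function name `chroma_key`, the `tolerance` value and the tiny test images are hypothetical choices for illustration, not a description of any broadcast system.

```python
def chroma_key(foreground, background, key=(0, 255, 0), tolerance=100):
    """Replace foreground pixels close to the key colour with background pixels."""
    def is_key(pixel):
        # Squared Euclidean distance in RGB space to the key colour.
        dist2 = sum((c - k) ** 2 for c, k in zip(pixel, key))
        return dist2 <= tolerance ** 2

    return [
        [bg if is_key(fg) else fg for fg, bg in zip(fg_row, bg_row)]
        for fg_row, bg_row in zip(foreground, background)
    ]


# 2x2 studio frame: the left column is green screen, the right column is the reporter.
studio = [[(0, 255, 0), (200, 150, 120)],
          [(10, 240, 5), (30, 30, 30)]]
# Computer generated weather map of the same size.
weather_map = [[(0, 0, 255), (0, 0, 255)],
               [(255, 255, 255), (255, 255, 255)]]

composite = chroma_key(studio, weather_map)
```

In this toy frame the two green-screen pixels are replaced by the map while the reporter pixels are kept. A real keyer would typically work in a different colour space and soften the matte edges.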

Movie special effects make use of digital compositing to create illusions (Pyros and Goren 1995). Strictly speaking with current technology this may not be considered augmented reality because it is not generated in real-time. Most special effects are created off-line, frame by frame with a substantial amount of user interaction and computer graphics system rendering. But some work is progressing in computer analysis of the live action images to determine the camera parameters and use this to drive the generation of the virtual graphics objects to be merged (Zorpette 1994).

Princeton Electronic Billboard has developed an augmented reality system that allows broadcasters to insert advertisements into specific areas of the broadcast image (National Association of Broadcasters 1994). For example, while broadcasting a baseball game this system would be able to place an advertisement in the image so that it appears on the outfield wall of the stadium. The electronic billboard requires calibration to the stadium by taking images from typical camera angles and zoom settings in order to build a map of the stadium including the locations in the images where advertisements will be inserted. By using pre-specified reference points in the stadium, the system automatically determines the camera angle being used and referring to the pre-defined stadium map inserts the advertisement into the correct place. The approach used for mapping these planar surfaces is similar to that used by the U of R augmented reality system described in Section 2.

The military has been using displays in cockpits that present information to the pilot on the windshield of the cockpit or the visor of their flight helmet. This is a form of augmented reality display. SIMNET, a distributed war games simulation system, is also embracing augmented reality technology. By equipping military personnel with helmet mounted visor displays or a special purpose rangefinder (Urban 1995) the activities of other units participating in the exercise can be imaged. While looking at the horizon, for example, the display equipped soldier could see a helicopter rising above the tree line (Metzger 1993). This helicopter could be flown in simulation by another participant. In wartime, the display of the real battlefield scene could be augmented with annotation information or highlighting to emphasize hidden enemy units.

Imagine that a group of designers are working on the model of a complex device for their clients. The designers and clients want to do a joint design review even though they are physically separated. If each of them had a conference room that was equipped with an augmented reality display this could be accomplished. The physical prototype that the designers have mocked up is imaged and displayed in the client's conference room in 3D. The clients can walk around the display looking at different aspects of it. To hold discussions the client can point at the prototype to highlight sections and this will be reflected on the real model in the augmented display that the designers are using. Or perhaps in an earlier stage of the design, before a prototype is built, the view in each conference room is augmented with a computer generated image of the current design built from the CAD files describing it. This would allow real time interaction with elements of the design so that either side can make adjustments and changes that are reflected in the view seen by both groups (Ahlers, Kramer et al. 1995). A technique for interactively obtaining a model for 3D objects called 3D stenciling that takes advantage of an augmented reality display is being investigated in our department by Kyros Kutulakos.

1.2.5 Robotics and Telerobotics

In the domain of robotics and telerobotics an augmented display can assist the user of the system (Kim, Schenker et al. 1993; Milgram, Zhai et al. 1993). A telerobotic operator uses a visual image of the remote workspace to guide the robot. Annotation of the view would still be useful just as it is when the scene is in front of the operator. There is an added potential benefit. Since often the view of the remote scene is monoscopic, augmentation with wireframe drawings of structures in the view can facilitate visualization of the remote 3D geometry. If the operator is attempting a motion it could be practiced on a virtual robot that is visualized as an augmentation to the real scene. The operator can decide to proceed with the motion after seeing the results. The robot motion could then be executed directly which in a telerobotics application would eliminate any oscillations caused by long delays to the remote site. More information about augmented reality in robotics can be found at (Milgram 1995).

1.2.6 Manufacturing, Maintenance and Repair

When a maintenance technician approaches a new or unfamiliar piece of equipment, instead of opening several repair manuals they could put on an augmented reality display. In this display the image of the equipment would be augmented with annotations and information pertinent to the repair. For example, the location of fasteners and attachment hardware that must be removed would be highlighted. Then the inside view of the machine would highlight the boards that need to be replaced (Feiner, MacIntyre et al. 1993; Uenohara and Kanade 1995). An example of augmented reality being used for maintenance can be seen at (Feiner 1995). The military has developed a wireless vest worn by personnel that is attached to an optical see-through display (Urban 1995). The wireless connection allows the soldier to access repair manuals and images of the equipment. Future versions might register those images on the live scene and provide animation to show the procedures that must be performed.

Boeing researchers are developing an augmented reality display to replace the large work frames used for making wiring harnesses for their aircraft (Caudell 1994; Sims 1994). Using this experimental system, the technicians are guided by the augmented display that shows the routing of the cables on a generic frame used for all harnesses. The augmented display allows a single fixture to be used for making the multiple harnesses.

Virtual reality systems are already used for consumer design. Using perhaps more of a graphics system than virtual reality, when you go to the typical home store wanting to add a new deck to your house, they will show you a graphical picture of what the deck will look like. It is conceivable that a future system would allow you to bring a video tape of your house shot from various viewpoints in your backyard and in real time it would augment that view to show the new deck in its finished form attached to your house. Or bring in a tape of your current kitchen and the augmented reality processor would replace your current kitchen cabinetry with virtual images of the new kitchen that you are designing.

Applications in the fashion and beauty industry that would benefit from an augmented reality system can also be imagined. If the dress store does not have a particular style dress in your size an appropriate sized dress could be used to augment the image of you. As you looked in the three sided mirror you would see the image of the new dress on your body. Changes in hem length, shoulder styles or other particulars of the design could be viewed on you before you place the order. When you head into some high-tech beauty shops today you can see what a new hair style would look like on a digitized image of yourself. But with an advanced augmented reality system you would be able to see the view as you moved. If the dynamics of hair are included in the description of the virtual object you would also see the motion of your hair as your head moved.

1.3 An Augmented Reality System

This section will describe the components that make up a typical augmented reality system. Despite the different domains discussed in Section 1.2 in which augmented reality systems are being applied, the systems have common subcomponents. This discussion will highlight how augmented reality is an area where multiple technologies blend together into a single system. The fields of computer vision, computer graphics and user interfaces are actively contributing to advances in augmented reality systems.

1.3.1 Typical Augmented Reality System

A standard virtual reality system seeks to completely immerse the user in a computer generated environment. This environment is maintained by the system in a frame of reference registered with the computer graphic system that creates the rendering of the virtual world. For this immersion to be effective, the egocentered frame of reference maintained by the user's body and brain must be registered with the virtual world reference. This requires that motions or changes made by the user will result in the appropriate changes in the perceived virtual world. Because the user is looking at a virtual world there is no natural connection between these two reference frames and a connection must be created (Azuma 1993). An augmented reality system could be considered the ultimate immersive system. The user can not become more immersed in the real world. The task is to now register the virtual frame of reference with what the user is seeing. As mentioned in Section 1.1, this registration is more critical in an augmented reality system because we are more sensitive to visual misalignments than to the type of vision-kinesthetic errors that might result in a standard virtual reality system. Figure 4 shows the multiple reference frames that must be related in an augmented reality system.

Figure 4 - Components of an Augmented Reality System

The scene is viewed by an imaging device, which in this case is depicted as a video camera. The camera performs a perspective projection of the 3D world onto a 2D image plane. The intrinsic (focal length and lens distortion) and extrinsic (position and pose) parameters of the device determine exactly what is projected onto its image plane. The generation of the virtual image is done with a standard computer graphics system. The virtual objects are modeled in an object reference frame. The graphics system requires information about the imaging of the real scene so that it can correctly render these objects. This data will control the synthetic camera that is used to generate the image of the virtual objects. This image is then merged with the image of the real scene to form the augmented reality image.
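The perspective projection described above can be sketched with an ideal pinhole model. This is a minimal illustration only: it assumes no lens distortion, a rotation matrix given as three rows, and image coordinates in the same units as the focal length. The function name `project` and its parameters are hypothetical. The same intrinsic and extrinsic values would also be handed to the synthetic graphics camera so that the virtual objects are rendered from the matching viewpoint.

```python
def project(point_world, rotation, translation, focal_length,
            principal_point=(0.0, 0.0)):
    """Project a 3D world point onto the 2D image plane of an ideal pinhole camera."""
    # Extrinsic step: world frame -> camera frame, X_cam = R * X_world + t.
    x_cam = [sum(r * p for r, p in zip(row, point_world)) + t
             for row, t in zip(rotation, translation)]
    x, y, z = x_cam
    if z <= 0:
        raise ValueError("point is behind the camera")
    # Intrinsic step: perspective division scaled by the focal length.
    u = focal_length * x / z + principal_point[0]
    v = focal_length * y / z + principal_point[1]
    return (u, v)


# Camera at the world origin looking down +Z, with a 20 mm focal length.
identity = [[1.0, 0.0, 0.0],
            [0.0, 1.0, 0.0],
            [0.0, 0.0, 1.0]]
u, v = project((100.0, 50.0, 1000.0), identity, (0.0, 0.0, 0.0), 20.0)
# A point 1 m in front of the camera lands 2 mm right and 1 mm up on the image plane.
```

Any error in the rotation, translation or focal length used here shifts the projected point, which is why registration depends on how well these parameters are known.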

The video imaging and graphic rendering described above are relatively straightforward. The research activities in augmented reality center around two aspects of the problem. One is to develop methods to register the two distinct sets of images and keep them registered in real time. Some new work in this area has started to make use of computer vision techniques. The second direction of research is in display technology for merging the two images. Section 1.3.3 will briefly discuss aspects of the research in display technology. Sections 1.3.4 and 1.3.5 will discuss the current approaches to registration of the various frames of reference in the system.

1.3.2 Performance Issues in an Augmented Reality System

Augmented reality systems are expected to run in real-time so that a user will be able to move about freely within the scene and see a properly rendered augmented image. This places two performance criteria on the system: the update rate for generating the augmenting image, and the accuracy of the registration of the real and virtual images.

Visually the real-time constraint is manifested in the user viewing an augmented image in which the virtual parts are rendered without any visible jumps. To appear without any jumps, a standard rule of thumb is that the graphics system must be able to render the virtual scene at least 10 times per second. This is well within the capabilities of current graphics systems for simple to moderate graphics scenes. For the virtual objects to realistically appear part of the scene more photorealistic graphics rendering is required. The current graphics technology does not support fully lit, shaded and ray-traced images of complex scenes. Fortunately, there are many applications for augmented reality in which the virtual part is either not very complex or will not require a high level of photorealism.

Failures in the second performance criterion have two possible causes. One is a misregistration of the real and virtual scene because of noise in the system. The position and pose of the camera with respect to the real scene must be sensed. Any noise in this measurement has the potential to be exhibited as errors in the registration of the virtual image with the image of the real scene. Fluctuations of values while the system is running will cause jittering in the viewed image. As mentioned previously, our visual system is very sensitive to visual errors which in this case would be the perception that the virtual object is not stationary in the real scene or is incorrectly positioned. Misregistrations of even a pixel can be detected under the right conditions (see Section 1.3.4). The second cause of misregistration is time delays in the system. As mentioned in the previous paragraph, a minimum cycle time of 0.1 seconds is needed for acceptable real-time performance. If there are delays in calculating the camera position or the correct alignment of the graphics camera then the augmented objects will tend to lag behind motions in the real scene. The system design should minimize the delays to keep overall system delay within the requirements for real-time performance.

1.3.3 Display Technologies in Augmented Reality

The combination of real and virtual images into a single image presents new technical challenges for designers of augmented reality systems. How to do this merging of the two images is a basic decision the designer must make. Section 1.1 discussed the continuum that Milgram (Milgram and Kishino 1994; Milgram, Takemura et al. 1994) uses to categorize augmented reality systems. His Extent of Presence Metaphor directly relates to the display that is used. At one end of the spectrum is monitor based viewing of the augmented scene. This has sometimes been referred to as "Window on the World" (Feiner, MacIntyre et al. 1993) or Fish Tank virtual reality (Ware, Arthur et al. 1993). The user has little feeling of being immersed in the environment created by the display. This technology, diagrammed in Figure 5, is the simplest available. It is the technology that the U of R augmented reality demonstration (Section 2.6) uses as do several other systems in the literature (Drascic, Grodski et al. 1993; Ahlers, Breen et al. 1994).

Figure 5 - Monitor Based Augmented Reality

To increase the sense of presence other display technologies are needed. Head-mounted displays (HMD) have been widely used in virtual reality systems. Augmented reality researchers have been working with two types of HMD. These are called video see-through and optical see-through. The "see-through" designation comes from the need for the user to be able to see the real world view that is immediately in front of him even when wearing the HMD. The standard HMD used in virtual reality work gives the user complete visual isolation from the surrounding environment. Since the display is visually isolating the system must use video cameras that are aligned with the display to obtain the view of the real world. A diagram of a video see-through system is shown in Figure 6. This can be seen to actually be the same architecture as the monitor based display described above except that now the user has a heightened sense of immersion in the display.

Figure 6 - Video See-through Augmented Reality Display

The optical see-through HMD (Manhart, Malcolm et al. 1993) eliminates the video channel that is looking at the real scene. Instead, as shown in Figure 7, the merging of real world and virtual augmentation is done optically in front of the user. This technology is similar to heads up displays (HUD) that commonly appear in military airplane cockpits and recently some experimental automobiles. In this case, the optical merging of the two images is done on the head mounted display, rather than the cockpit window or auto windshield, prompting the nickname of HUD on a head.

Figure 7 - Optical See-through Augmented Reality Display

There are advantages and disadvantages to each of these types of displays. They are discussed in greater detail by Azuma (Azuma 1995). There are some performance issues, however, that will be highlighted here. With both of the displays that use a video camera to view the real world there is a forced delay of up to one frame time to perform the video merging operation. At standard frame rates that will be potentially a 33.33 millisecond delay in the view seen by the user. Since everything the user sees is under system control compensation for this delay could be made by correctly timing the other paths in the system. Or, alternatively, if other paths are slower then the video of the real scene could be delayed. With an optical see-through display the view of the real world is instantaneous so it is not possible to compensate for system delays in other areas. On the other hand, with monitor based and video see-through displays a video camera is viewing the real scene. An advantage of this is that the image generated by the camera is available to the system to provide tracking information. The U of R augmented reality system exploits this advantage. The optical see-through display does not have this additional information. The only position information available with that display is what can be provided by position sensors on the head mounted display itself.
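The delay compensation described above for video based displays can be sketched as a small calculation. It assumes that the "standard frame rate" is 30 frames per second, which matches the 33.33 millisecond figure, and that the real video can only be delayed by whole frames. The function name and the 80 ms graphics path latency are hypothetical values chosen for illustration.

```python
import math

FRAME_RATE_HZ = 30.0                      # assumed standard video frame rate
FRAME_TIME_MS = 1000.0 / FRAME_RATE_HZ    # about 33.33 ms per frame


def video_delay_frames(video_path_ms, graphics_path_ms):
    """Whole frames by which to delay the real video so it lines up with the graphics."""
    lag_ms = graphics_path_ms - video_path_ms
    if lag_ms <= 0:
        return 0  # the graphics path is not slower, so no video delay is needed
    return math.ceil(lag_ms / FRAME_TIME_MS)


# The video path costs one frame time for merging; the graphics path is assumed to take 80 ms.
extra_frames = video_delay_frames(FRAME_TIME_MS, 80.0)
```

With these assumed numbers the video would be held back two frames. An optical see-through display has no equivalent adjustment, because the real view cannot be delayed.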

1.3.4 Tracking Requirements in Augmented Reality

Tracking the position and motions of the user in virtual reality systems has been the subject of a wide array of research. One of the most commonly used methods to track position and orientation is with a magnetic sensor such as the Polhemus Isotrak. Position tracking is needed in virtual reality to instruct the graphics system to render a view of the world from the user's new position. Because of the phenomenon of visual capture mentioned in Section 1.1, the user of a virtual reality system will tolerate, and possibly adapt to, errors between their perceived motion and what visually results. With an augmented reality system the registration is with the visual field of the user. The type of display used by the augmented reality system will determine the accuracy needed for registration of the real and virtual images. The central fovea of a human eye has a resolution of about 0.5 min of arc (Jain 1989). In this area the human eye is capable of differentiating alternating brightness bands that subtend one minute of arc. That capability defines the ultimate registration goal for an augmented reality system. The resolution of the virtual image is directly mapped over this real world view when an optical see-through display is used. If it is a monitor or video see-through display then both the real and virtual worlds are reduced to the resolution of the display device. Consider one of the Sony cameras that are in the lab, which have CCD sensors of 8.8 x 6.6 mm (horizontal x vertical) with a cell arrangement of 768 x 493. Using a mid-range 20 mm lens yields a 25° field of view across the standard 512 horizontal digitization, which is 0.05°/digitized-pixel or about 3 minutes of arc/pixel. This indicates that single pixel differences are resolvable in the central fovea. The current technology for head tracking specifies an orientation accuracy of 0.15° (Polhemus Corporation 1996), falling short of what is needed to maintain single pixel alignment on augmented reality displays. 
These magnetic trackers also introduce errors caused by any surrounding metal objects in the environment. This appears as an error in position and orientation that can not be easily modeled and will change if any of the interfering objects move. In addition, measurement delays have been found in the 40 to 100 msec range for typical position sensors (Adelstein, Johnston et al. 1992) which is a significant part of the 100 msec cycle time needed for real-time operation. Augmented reality researchers are looking at hybrid techniques for tracking (Azuma 1993; Zikan, Curtis et al. 1994).
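The resolution arithmetic in this section can be checked with a short calculation. This is a sketch under an ideal pinhole lens assumption, using the sensor width, focal length, digitization width and tracker accuracy quoted in the text. The variable names are illustrative, and the computed values are approximate, so they may differ slightly from the rounded figures given above.

```python
import math


def horizontal_fov_deg(sensor_width_mm, focal_length_mm):
    """Horizontal field of view of an ideal pinhole camera, in degrees."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


fov_deg = horizontal_fov_deg(8.8, 20.0)          # 8.8 mm wide CCD behind a 20 mm lens
deg_per_pixel = fov_deg / 512                    # 512 digitized pixels across the image
arcmin_per_pixel = deg_per_pixel * 60.0          # one degree is 60 minutes of arc
tracker_error_pixels = 0.15 / deg_per_pixel      # 0.15 degree orientation accuracy

print(f"field of view: {fov_deg:.1f} degrees")
print(f"per pixel:     {deg_per_pixel:.3f} degrees = {arcmin_per_pixel:.1f} arc minutes")
print(f"tracker error: {tracker_error_pixels:.1f} pixels")
```

Under these assumptions each pixel spans a little under 3 minutes of arc, well above the one minute of arc the fovea can resolve, and the quoted tracker accuracy corresponds to a misalignment of roughly three pixels.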

1.3.5 Previous Approaches to Augmented Reality

An augmented reality system can be viewed as a collection of the related reference frames shown in Figure 4. Correct registration of a virtual image over the real scene requires the system to represent the two images in the same frame of reference. In the real world these frames are all expressed in a 3D Euclidean system. Most previous work in augmented reality has used that Euclidean system and carefully controlled and measured the relationships between these various reference frames. Doing this requires tracking the position of the user in 3D Euclidean space and accurate knowledge of camera calibration parameters throughout the entire sequence (Azuma 1993; Janin, Mizell et al. 1993; Azuma and Bishop 1994; Tuceryan, Greer et al. 1995). Position tracking, such as with the commonly used electromagnetic Polhemus sensor, and camera calibration techniques are error-prone in operation, resulting in misregistration of the real and virtual images. In a keynote address at the IEEE 1996 Virtual Reality International Symposium, Fred Brooks of the Department of Computer Science at the University of North Carolina at Chapel Hill, well known in virtual reality research, stated that he did not believe that position tracking in augmented reality systems would ever work well enough to be the sole tracking technology because of the inaccuracies and delays in the system. Durlach and Mavor (1995) come to the same conclusion and suggest that the most promising technique may combine standard position tracking for gross registration with an image-based method for the final fine tuning.

Only a small amount of work has tried to mitigate or eliminate the errors due to tracking and calibration by using image processing of the live video data (Bajura and Neumann 1995; Wloka and Anderson 1995). A few recent augmented reality systems (Mellor 1995; Ravela, Draper et al. 1995) neither rely on a method for tracking the position of the camera nor require information about the calibration parameters of that camera. The problem of registering the virtual objects over the live video is solved as a pose estimation problem. By tracking feature points in the video image these systems invert the projection operation performed by the camera and estimate the camera's parameters. This does, however, require knowledge of the Euclidean 3D location of the feature points so that the camera parameters can be estimated in a Euclidean frame. Two of the systems (Grimson, Lozano-Perez et al. 1994; Grimson, Ettinger et al. 1995; Mellor 1995; Mellor 1995) use a laser range finder to obtain this 3D data. Requiring the precise location of the feature points restricts which features can be used for tracking, so these systems exchange the original calibration problem for a somewhat different one.
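
To make the idea of inverting the camera projection concrete, the sketch below estimates a 3x4 projection matrix from feature points whose Euclidean 3D locations are known, using a generic direct linear formulation solved through the normal equations. This is an illustration only and is not the method of any of the cited systems. The function names, the synthetic camera `P_true` and the choice of cube corners as feature points are all assumptions made for the example. Exact rational arithmetic is used so the sketch has no rounding concerns; a practical implementation would more likely use a floating-point least-squares or SVD routine.

```python
from fractions import Fraction

def solve_exact(M, rhs):
    """Solve the square system M x = rhs by Gaussian elimination in exact rationals."""
    n = len(M)
    aug = [[Fraction(v) for v in row] + [Fraction(b)] for row, b in zip(M, rhs)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if aug[r][col] != 0), None)
        if pivot is None:
            raise ValueError("degenerate point configuration")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= f * aug[col][c]
    x = [Fraction(0)] * n
    for i in reversed(range(n)):
        s = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - s) / aug[i][i]
    return x

def estimate_projection(points_3d, points_2d):
    """Least-squares 3x4 projection matrix, scaled so that P[2][3] == 1.

    Needs at least six correspondences in a non-degenerate configuration.
    """
    rows, rhs = [], []
    for (X, Y, Z), (u, v) in zip(points_3d, points_2d):
        # Each correspondence gives two linear equations in the 11 unknowns.
        rows.append([X, Y, Z, 1, 0, 0, 0, 0, -u * X, -u * Y, -u * Z])
        rhs.append(u)
        rows.append([0, 0, 0, 0, X, Y, Z, 1, -v * X, -v * Y, -v * Z])
        rhs.append(v)
    n = 11
    # Normal equations: (A^T A) p = A^T b
    AtA = [[sum(r[i] * r[j] for r in rows) for j in range(n)] for i in range(n)]
    Atb = [sum(r[i] * b for r, b in zip(rows, rhs)) for i in range(n)]
    p = solve_exact(AtA, Atb) + [Fraction(1)]
    return [p[0:4], p[4:8], p[8:12]]

def project(P, point):
    """Perspective projection of a 3D point by the 3x4 matrix P."""
    X, Y, Z = point
    h = [row[0] * X + row[1] * Y + row[2] * Z + row[3] for row in P]
    return (h[0] / h[2], h[1] / h[2])

# Hypothetical camera, used only to synthesize image measurements for the demo.
P_true = [[Fraction(v) for v in row] for row in
          ([800, 0, 320, 2400], [0, 800, 240, 2800], [0, 0, 1, 5])]
# Feature points with known 3D locations: the corners of a unit cube.
corners = [(x, y, z) for x in (0, 1) for y in (0, 1) for z in (0, 1)]
pixels = [project(P_true, c) for c in corners]

P_est = estimate_projection(corners, pixels)
print(project(P_est, (2, -1, 3)) == project(P_true, (2, -1, 3)))
```

The recovered matrix differs from `P_true` only by an overall scale factor, which does not affect where points project. The example also shows the dependency noted above: without the 3D coordinates in `corners`, the equations cannot be formed at all.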

One common theme of this previous work is that all the reference frames are defined in a 3D Euclidean space. Extracting this information from the image of the real scene is an error-prone process. By relaxing the requirement that all frames have a Euclidean definition it is possible to eliminate the need for this precise calibration and tracking. An approach similar to the one used by the U of R augmented reality system is described by Uenohara and Kanade (1995). They visually track markers on a 2D surface and use them for registration of the virtual objects. The U of R augmented reality system requires no a priori metric information about the intrinsic and extrinsic parameters of the camera, where the user is located in the world, or the position of objects in the world. The capacity to operate with no calibration information is achieved by using an affine representation for all reference frames.
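
The following Python sketch illustrates why an affine representation can remove the need for metric calibration. It relies on a standard property of affine (for example, weak perspective) cameras: if a point is expressed in affine coordinates relative to four non-coplanar basis points, its image is the same linear combination of the images of those basis points. The section above does not give the system's actual formulation, so this is an assumed, simplified illustration; all function names and the synthetic camera are hypothetical.

```python
def reproject_from_basis(basis_img, coords):
    """Image position of a point from the tracked images of four basis points.

    basis_img: [(u0, v0), (u1, v1), (u2, v2), (u3, v3)], the image positions
               of the affine origin p0 and the three other basis points.
    coords:    (x, y, z), the point's affine coordinates in that basis.
    """
    (u0, v0), *rest = basis_img
    u = u0 + sum(c * (ui - u0) for c, (ui, _) in zip(coords, rest))
    v = v0 + sum(c * (vi - v0) for c, (_, vi) in zip(coords, rest))
    return (u, v)

def project_affine(point, A, t):
    """Hypothetical affine camera, used only to generate data for the demo."""
    return tuple(sum(a * p for a, p in zip(row, point)) + ti
                 for row, ti in zip(A, t))

# Synthetic camera parameters; the reprojection step never looks at them.
A = [[2.0, 0.5, -1.0], [0.3, 1.5, 0.7]]
t = (100.0, 50.0)

# Four non-coplanar basis points; here the affine frame happens to coincide
# with the world axes, so affine coordinates equal world coordinates.
basis_world = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1)]
basis_img = [project_affine(p, A, t) for p in basis_world]

virtual_point = (0.5, 0.25, 2.0)
from_basis = reproject_from_basis(basis_img, virtual_point)
from_camera = project_affine(virtual_point, A, t)
print(from_basis, from_camera)
```

The two results agree even though `reproject_from_basis` uses only tracked image positions and affine coordinates, with no knowledge of the camera parameters or of metric positions in the world.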

Page last modified: 22 August 2002 02:15 PM; jvallino@mail.rit.edu